Getting Started¶

When training a neural network, we often focus only on the end output of the model. We seldom think about the stages a model goes through during training when we analyze its performance or its behaviour in a given situation.

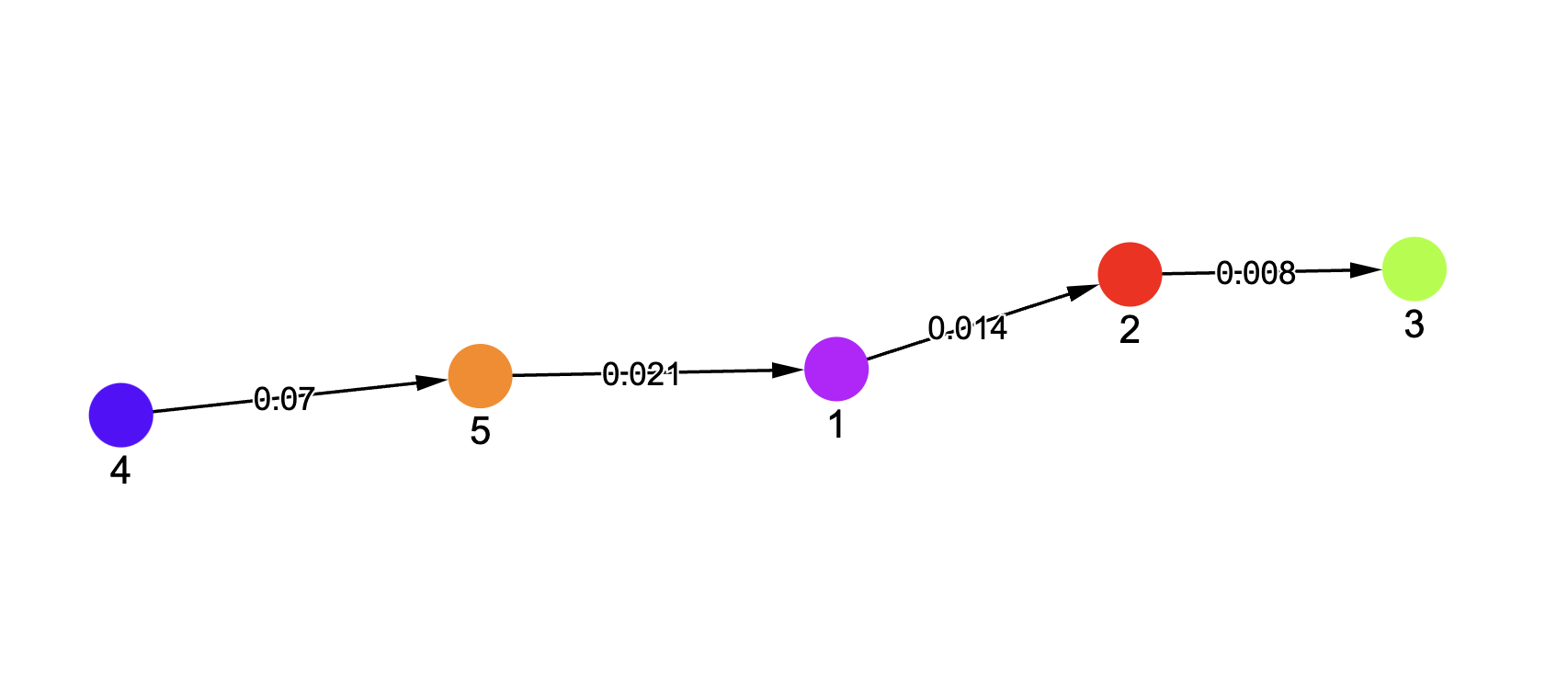

Our aim is to change that approach and offer a deep dive into those stages. The figure above is a sneak peek: it shows the stages a neural network goes through during the training process.

Latent States¶

These stages are also referred to as Phases or Latent States. A Latent State is a period of training during which the characteristics of the model broadly fall under one consistent definition. For example, a model can go through three stages:

The model is memorizing the training data (indicated by an increase in training accuracy).

The model has memorized the training data but doesn’t generalize well (good training accuracy but poor accuracy on validation data).

The model generalizes well to unseen data (good accuracy on both training and validation data).
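The three example stages above can be told apart, roughly, from training and validation accuracy. The following is a minimal sketch and not the library's method: the function name `label_stage`, the single threshold `good`, and the checkpoint values are all assumptions made for illustration. A fixed threshold is a simplification of "increase in training accuracy".

```python
def label_stage(train_acc: float, val_acc: float, good: float = 0.9) -> str:
    """Assign one of the three example stages from two accuracies.

    `good` is an illustrative threshold, not a value used by the library.
    """
    if train_acc < good:
        return "memorizing"    # training accuracy has not yet reached `good`
    if val_acc < good:
        return "memorized"     # fits the training data, poor on validation data
    return "generalizing"      # good accuracy on both training and validation data


# Hypothetical (train, validation) accuracies at three successive checkpoints.
checkpoints = [(0.40, 0.35), (0.95, 0.50), (0.99, 0.97)]
stages = [label_stage(t, v) for t, v in checkpoints]
print(stages)
```

Hand-written rules like this only work when the stages are known in advance; the point of inferring Latent States is to discover them from the collected metrics instead.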

Training Dynamics¶

A transition between Latent States is often the result of a sudden change, or inflection point, in one or more of the metrics that define the model's performance. A sequence of these transitions is called a Generalization Strategy, Training Dynamics, or a Trajectory.
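To make the idea of a Trajectory concrete, here is a small sketch that turns a per-checkpoint sequence of state labels into the sequence of states visited and the points where transitions occur. The helper names `trajectory` and `transitions` and the labels `"A"`, `"B"`, `"C"` are hypothetical and are not part of the library.

```python
from itertools import groupby


def trajectory(labels):
    """Collapse consecutive repeats into the sequence of states visited."""
    return [state for state, _ in groupby(labels)]


def transitions(labels):
    """Return (checkpoint_index, from_state, to_state) for each state change."""
    return [
        (i, prev, cur)
        for i, (prev, cur) in enumerate(zip(labels, labels[1:]), start=1)
        if prev != cur
    ]


# Hypothetical latent-state label for each of six checkpoints.
labels = ["A", "A", "B", "B", "B", "C"]
path = trajectory(labels)
changes = transitions(labels)
print(path)
print(changes)
```

Two training runs that visit the same states in the same order share a Trajectory even if they spend different numbers of checkpoints in each state.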

This package offers a way to analyze and visualize Training Dynamics, enabling a deeper analysis of the generalization strategies adopted by neural networks.

This library is heavily inspired by the early work done by Michael Hu for the paper Latent State Models of Training Dynamics.

To get a deeper understanding of the inner workings, check the Step By Step Guide.

Installation¶

pip install visualizing-training

Import the library using:

import visualizing_training

Key Features¶

Key features include:

Interactive Visualization of Training Dynamics & Generalization Strategies in the form of network graphs and line charts.

Latent State predictions using Hidden Markov Models (HMM) to analyze the Training Dynamics.

Flexibility to define your own neural network architecture (Transformer, MLP, ResNet, and LeNet5 are currently supported).

Custom hookpoints to collect data at different stages of the architecture.

Calculation of select metrics on the collected data.
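As an illustration of what predicting Latent States with an HMM involves, the sketch below decodes the most likely state sequence from a single metric using the Viterbi algorithm. It is not the library's implementation: the function names, the one-dimensional Gaussian emissions, and all parameter values are assumptions, and the parameters are fixed by hand here, whereas in practice they would be fit to the collected metrics.

```python
import math


def gaussian_logpdf(x, mean, std):
    """Log density of a normal distribution at x."""
    return -0.5 * math.log(2 * math.pi * std**2) - (x - mean) ** 2 / (2 * std**2)


def viterbi(obs, start, trans, means, stds):
    """Most likely state sequence for a 1-D Gaussian HMM (log-space Viterbi)."""
    n = len(start)
    # Log score of the best path ending in each state after the first observation.
    score = [
        math.log(start[s]) + gaussian_logpdf(obs[0], means[s], stds[s])
        for s in range(n)
    ]
    back = []  # back[t][s] = best predecessor of state s at step t + 1
    for x in obs[1:]:
        prev, pointers, score = score, [], []
        for s in range(n):
            best = max(range(n), key=lambda p: prev[p] + math.log(trans[p][s]))
            pointers.append(best)
            score.append(
                prev[best]
                + math.log(trans[best][s])
                + gaussian_logpdf(x, means[s], stds[s])
            )
        back.append(pointers)
    # Trace back from the best final state.
    state = max(range(n), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]


# Hypothetical metric (for example, a loss value) recorded at six checkpoints.
metric = [2.1, 2.0, 1.9, 0.3, 0.2, 0.25]
states = viterbi(
    metric,
    start=[0.5, 0.5],
    trans=[[0.9, 0.1], [0.1, 0.9]],  # states tend to persist between checkpoints
    means=[2.0, 0.25],
    stds=[0.2, 0.2],
)
print(states)
```

The sudden drop in the metric between the third and fourth checkpoints is where the decoded state switches, which is the kind of transition that the network graphs and line charts are meant to surface.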